Hi, I'm Anastasia 🇺🇦 🇨🇦! I am a Research Scientist at Meta SuperIntelligence Labs, working on data curation and pre-training. I received my Ph.D. at the University of Toronto and Vector Institute, where I was fortunate to be advised by Brenda Andrews and Jimmy Ba.

I was lucky to work with many amazing researchers through my internships at Meta AI, Microsoft Research, AWS, and Recursion. More details in my CV.

Current work

Currently, I am working on improving the capabilities of the next generation of language models at MSL (Meta SuperIntelligence Labs). My focus is data curation and pre-training, with the aim of improving the reasoning and multilingual abilities of foundation models.

Research works

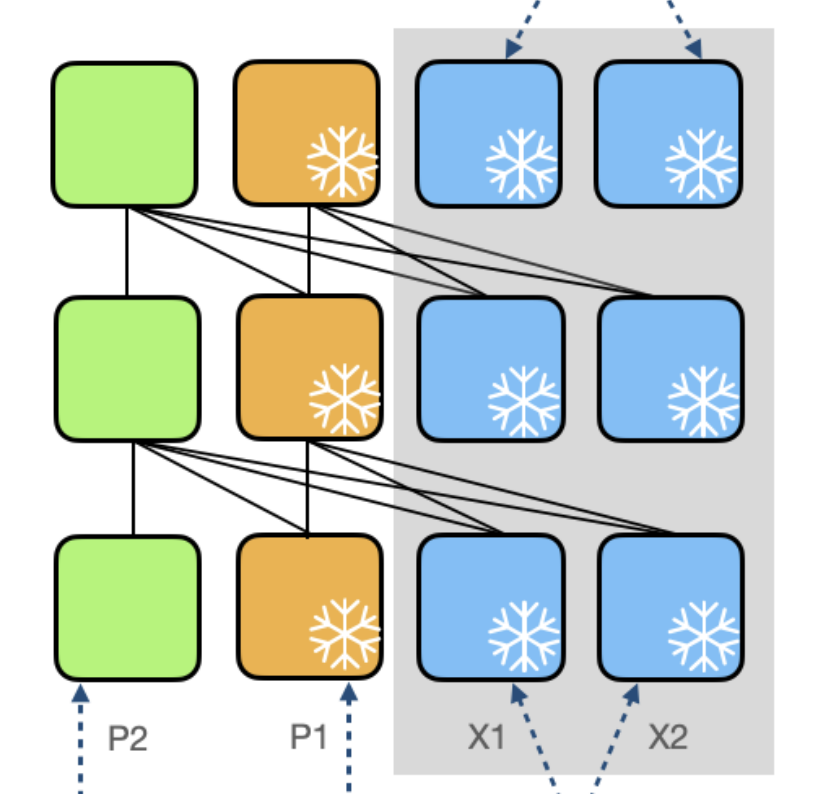

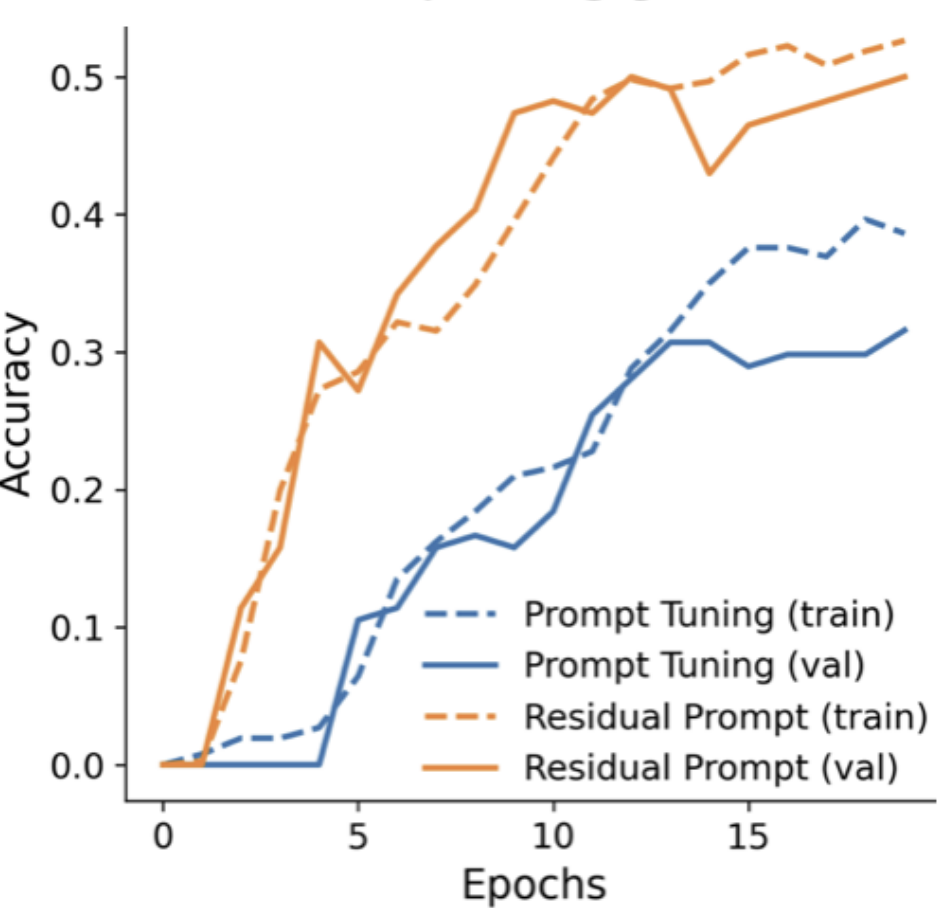

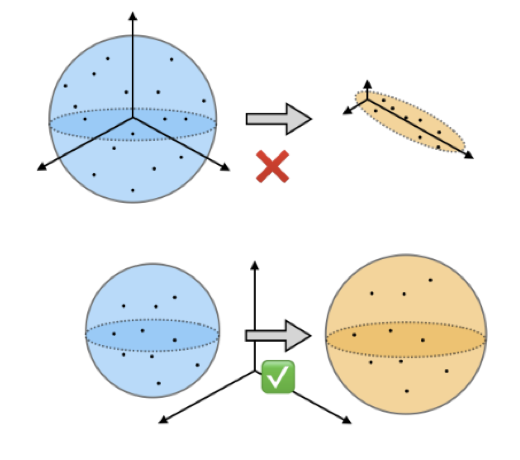

I am interested in developing robust algorithms that can succeed at tasks in real-world settings, including learning with limited labels, under data distribution shift, and across multiple tasks. Some of my research interests are:

- Efficiency and scalability: how can we make deep learning models more efficient? I am particularly interested in parameter-efficient learning and training with limited labels (Residual Prompts, Progressive Prompts, PIFiA).

- Lifelong learning: humans can learn a variety of tasks, gaining experience without forgetting what they already know. My goal is to develop effective lifelong learning methods inspired by the core principles of human thinking (Progressive Prompts, Representation Consistency Targets).

Progressive Prompts: continual learning for language models

ICLR 2023

Residual Prompt Tuning: Improving Prompt Tuning with Residual Reparameterization

ACL 2023, Findings

Representation Projection Invariance Mitigates Representation Collapse

EMNLP 2023, Findings

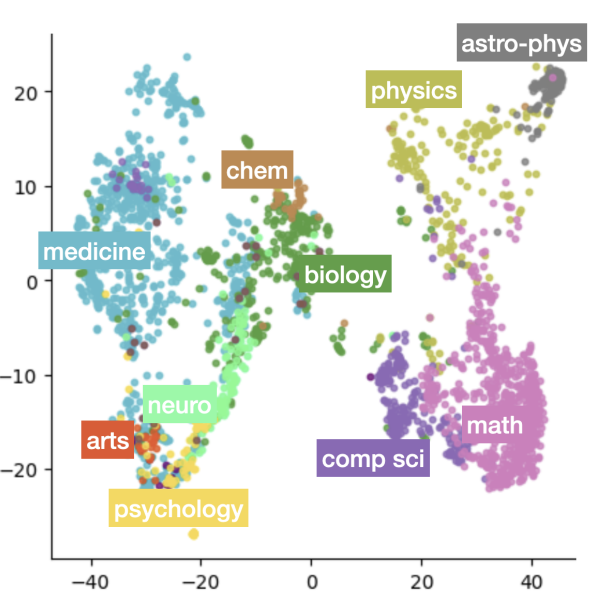

MIReAD: Simple Method for Learning High-quality Representations from Scientific Documents

ACL 2023

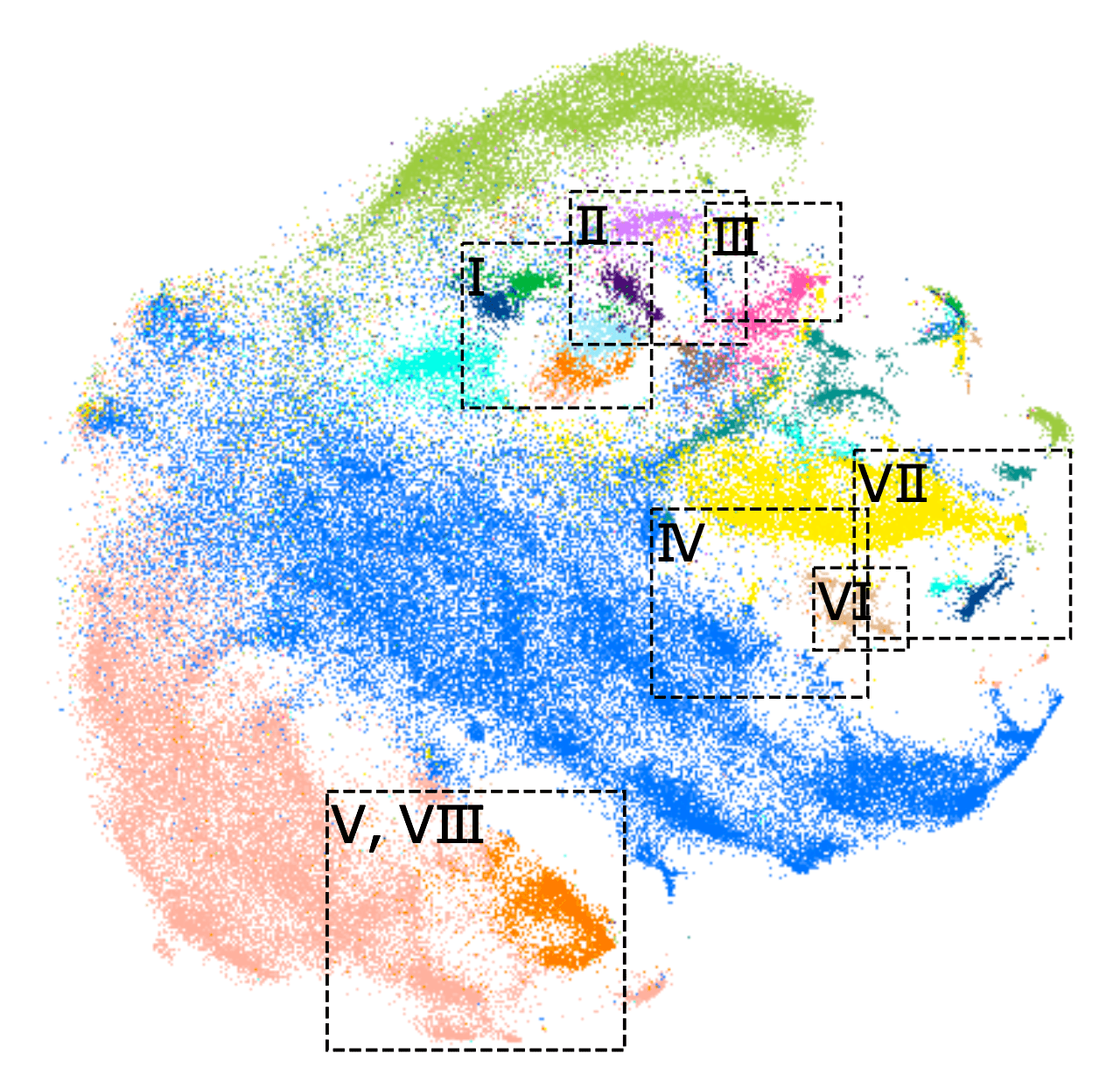

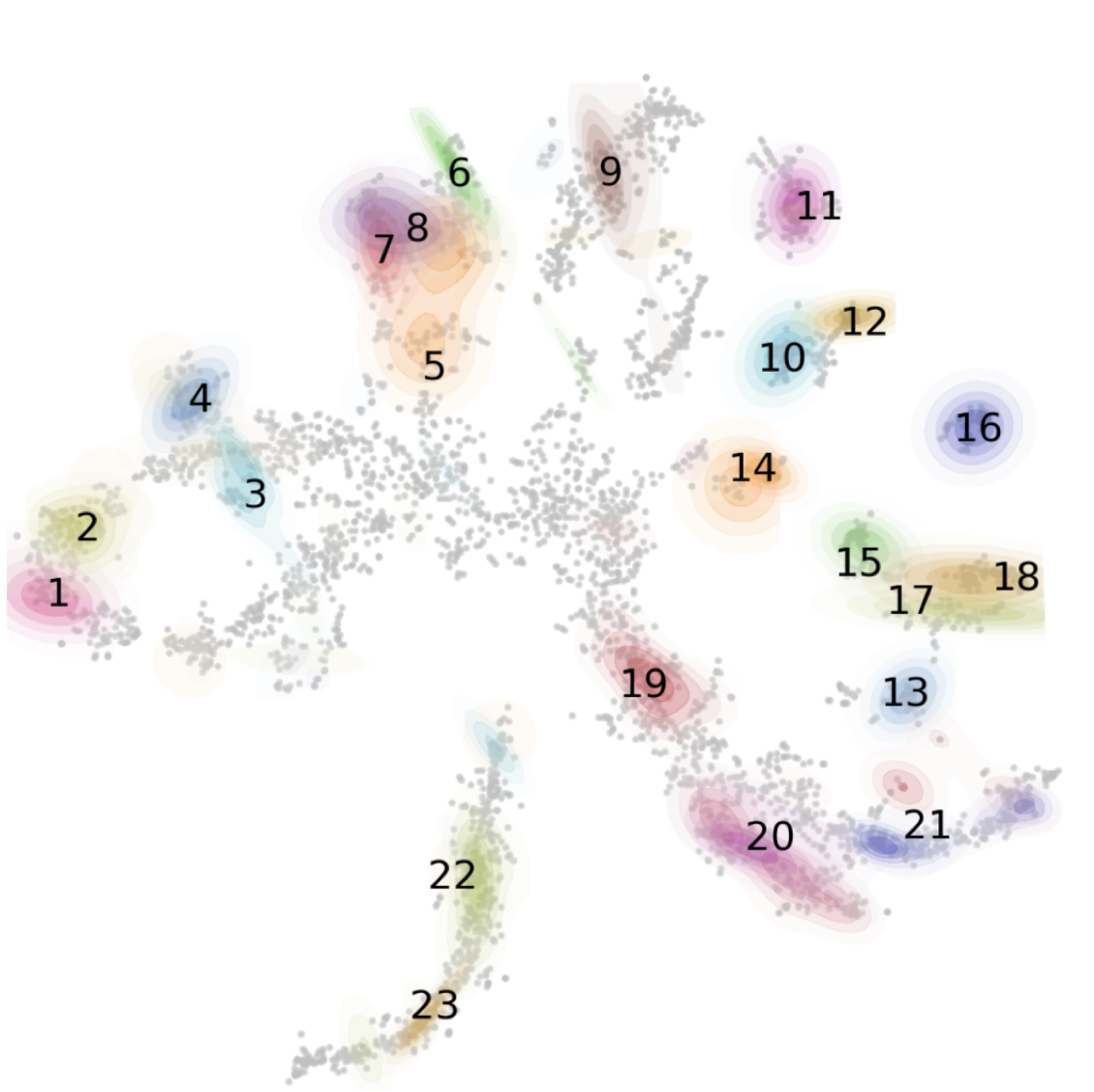

PIFiA: a self-supervised method for protein functional annotation from single-cell imaging data

In submission to Nature Methods

Learning multi-scale functional representations of proteins from single-cell microscopy data

ICLR 2022, MLDD

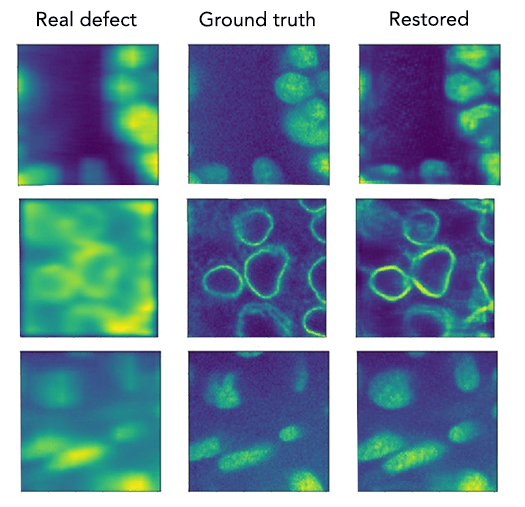

Multi-defect microscopy image restoration under limited data conditions

NeurIPS 2019, Medical Imaging workshop (rated in top-15 submissions)

Experience

Microsoft Research

Research Intern, NLP

May 2023 - Aug 2023

Team: Subho Mukherjee, Arindam Mitra, Hamid Palangi, Ahmed Awadallah

Project: Improving instruction tuning for LLaMA-based models via hyperparameter optimization and instruction data selection

Meta (Facebook) AI

Research Intern, AI Integrity & FAIR

Jun 2022 - Dec 2022

Team: Amjad Almahairi, Mike Lewis, Madian Khabsa, Yuning Mao, Rui Hou

Project: Prompt tuning-based Continual Learning for Language Models

Amazon Research

Applied Scientist Intern, AWS

May 2021 - Oct 2021

Team: Vivek Madan, Daniel Khashabi, Ashish Khetan, Vishaal Kapoor, Zohar Karnin

Project: Stabilizing Fine-tuning of Language Models

Recursion Pharmaceuticals

Data Science Intern, Computational Drug Discovery

Jun 2020 - Sep 2020

Team: Jes Ford, Berton Earnshaw, Jason Yosinski, Imran Haque

Project: Representation Learning for Drug Discovery

Talks

- Talk at FAIR: continual learning for language models without forgetting